Lenses Box

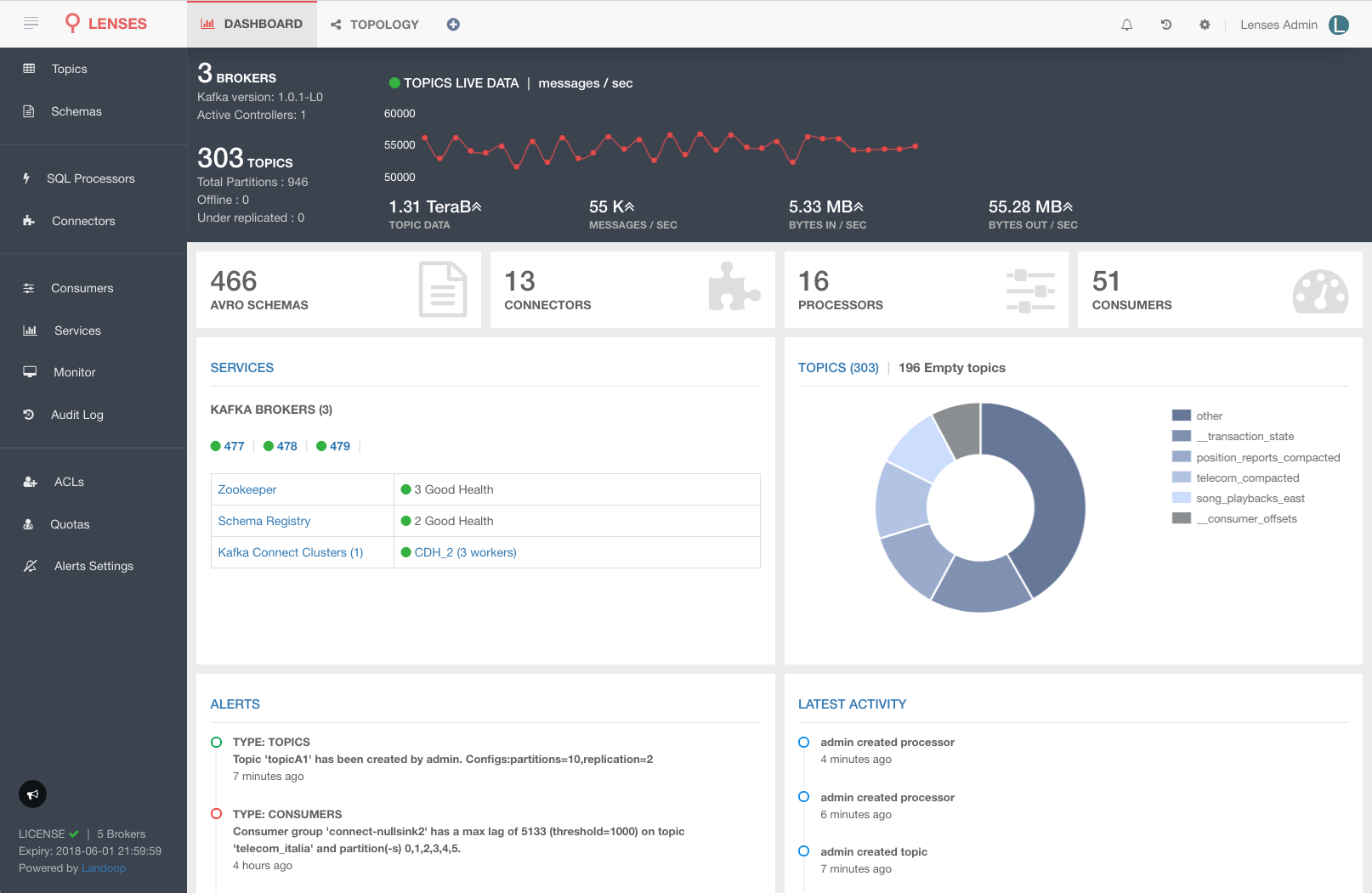

Lenses Box is a Docker image that contains Lenses and a full installation of Kafka with all its relevant components. The image also packs Landoop’s Stream Reactor connector collection. Everything is pre-configured, and the only requirement is having Docker installed. It contains Lenses, a Kafka Broker, Schema Registry, Kafka Connect, 25+ Kafka Connectors with SQL support, and CLI tools.

Prerequisites

Before you get started, you will need to install Docker. The Lenses Development Environment is a Docker image that contains not only Lenses but all the relevant services, pre-configured for local development.

The container works even on a low-memory machine with 8GB of RAM. It takes 30-45 seconds for the services to become available and a couple of minutes for Kafka Connect to load all the connectors.

Don’t have Docker? Check installation instructions here

Getting Started

Step 1: Get Lenses Development Environment

To run the Lenses Development Environment you will need a personalized token. Get it by registering at:

Lenses Development Environment

Step 2: Single command, Up and Running with Examples

docker run --rm -p 3030:3030 --name=lenses-dev --net=host -e EULA="https://dl.lenses.stream/d/?id=CHECK_YOUR_EMAIL_FOR_KEY" landoop/kafka-lenses-dev

You can periodically check for new versions of Lenses:

docker pull landoop/kafka-lenses-dev

Note

Kafka Connect will require a few more minutes to start up since it iterates and loads all the available connectors.

Access Lenses UI

In order to access the Lenses web user interface, open your browser and navigate to http://localhost:3030.

Login with admin / admin and enjoy streaming!
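Because the services need some time to start, a script may have to wait until the UI responds before going any further. The following is a minimal sketch and not part of the image: `wait_for_lenses` is a hypothetical helper name, and it assumes that `curl` is installed on the host and that the UI answers plain HTTP requests on port 3030.

```shell
# Hypothetical helper: poll the Lenses UI until it answers or a timeout expires.
# Arguments: URL (default http://localhost:3030), timeout in seconds (default 180).
wait_for_lenses() {
  local url="${1:-http://localhost:3030}"
  local timeout="${2:-180}"
  local waited=0
  # curl -f fails on HTTP errors; --max-time keeps a single attempt from hanging.
  until curl -fsS --max-time 5 -o /dev/null "$url" 2>/dev/null; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "Lenses did not respond at $url within ${timeout}s" >&2
      return 1
    fi
    sleep 5
    waited=$((waited + 5))
  done
  echo "Lenses is reachable at $url"
}

# Usage (after starting the container):
#   wait_for_lenses http://localhost:3030 180
```

Kafka Connect may still be loading connectors after the UI becomes reachable, so a successful check only means that Lenses itself is up.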

Access Command Line

To access the various Kafka command-line tools, such as the console producer and consumer, open a terminal in the container:

$ docker exec -it lenses-dev bash

root@fast-data-dev / $ kafka-topics --zookeeper localhost:2181 --list

Or directly:

docker exec -it lenses-dev kafka-topics --zookeeper localhost:2181 --list

If you enter the container, you will discover that we even provide bash auto-completion for some of the tools!
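As an illustration of the console producer and consumer, the sketch below creates a topic, writes one message and reads it back without entering the container. It rests on assumptions that are not stated above: that the broker listens on localhost:9092 inside the container, and that the producer and consumer tools follow the same naming as kafka-topics. The helper names `box` and `roundtrip` and the topic name `demo` are hypothetical.

```shell
# Name of the running container, as used in the commands above.
CONTAINER="${CONTAINER:-lenses-dev}"

# Hypothetical helper: run a command inside the container, forwarding stdin.
box() {
  docker exec -i "$CONTAINER" "$@"
}

# Hypothetical helper: create a topic, produce one message, read it back.
# Assumes the broker is reachable at localhost:9092 from inside the container.
roundtrip() {
  local topic="${1:-demo}"
  box kafka-topics --zookeeper localhost:2181 --create \
      --topic "$topic" --partitions 1 --replication-factor 1 &&
  echo "hello from the host" |
    box kafka-console-producer --broker-list localhost:9092 --topic "$topic" &&
  box kafka-console-consumer --bootstrap-server localhost:9092 \
      --topic "$topic" --from-beginning --max-messages 1
}

# Usage:
#   roundtrip demo
```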

Check our cheat sheet for interacting with Kafka from the command line.

Access Kafka Broker

Making full use of the development environment means you should be able to access the Kafka instance inside the Docker container. The way Docker and the Kafka brokers work requires a few extra settings. The Kafka Broker advertises an endpoint for client connections, and this endpoint must be accessible from your client. When running on macOS or Windows, Docker runs inside a virtual machine, which means there will be an extra networking layer in place.

If you run Docker on macOS or Windows, you may need to find the address of the VM running Docker and export it as the advertised address for the broker (on macOS it is usually 192.168.99.100). At the same time, you should give the kafka-lenses-dev image access to the VM’s network:

docker run -e EULA="CHECK_YOUR_EMAIL_FOR_KEY" \
    -e ADV_HOST="192.168.99.100" \
    --net=host --name=lenses-dev \
    landoop/kafka-lenses-dev
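If the VM address is not known in advance, it can be looked up rather than hard-coded. The following is a sketch under assumptions: `resolve_adv_host` is a hypothetical helper, it relies on `docker-machine ip` being available when Docker runs through docker-machine, and it assumes the machine is named `default` unless DOCKER_MACHINE_NAME says otherwise.

```shell
# Hypothetical helper: print the address to advertise for the broker.
# Order: an explicit ADV_HOST wins, then the docker-machine VM address.
resolve_adv_host() {
  if [ -n "${ADV_HOST:-}" ]; then
    printf '%s\n' "$ADV_HOST"
  elif command -v docker-machine >/dev/null 2>&1; then
    # Assumption: the VM is called "default" unless DOCKER_MACHINE_NAME is set.
    docker-machine ip "${DOCKER_MACHINE_NAME:-default}"
  else
    echo "Set ADV_HOST to an address your clients can reach" >&2
    return 1
  fi
}

# Usage:
#   docker run -e EULA="CHECK_YOUR_EMAIL_FOR_KEY" \
#       -e ADV_HOST="$(resolve_adv_host)" \
#       --net=host --name=lenses-dev \
#       landoop/kafka-lenses-dev
```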

If you run on Linux you don’t have to set ADV_HOST, but you can do something cool with it. If you set it to your machine’s IP address, you will be able to access Kafka from any client on your network. If you decide to run kafka-lenses-dev in the cloud, you (and all your team) will be able to access Kafka from your development machines. Just remember to provide the public IP of your server!

Persist Data

If you want your Development Environment to persist data between multiple executions, provide a name for your Docker instance and don’t set the container to be removed automatically (the --rm flag). For example:

docker run -p 3030:3030 -e EULA="CHECK_YOUR_EMAIL_FOR_KEY" \
    --name=lenses-dev landoop/kafka-lenses-dev

When you want to free up resources, press Control-C to stop the container. You then have two options: either remove the Development Environment:

docker rm lenses-dev

Or use it at a later time and continue from where you left off:

docker start -a lenses-dev
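The two cases, first run and later restart, can be folded into one helper so that the same command works every time. This is only a sketch: `start_or_run_lenses` is a hypothetical name, and it assumes the EULA value from your registration email is exported in the `EULA` environment variable.

```shell
# Hypothetical helper: reuse the named container if it exists, else create it.
# Assumes EULA is exported in the environment before the first run.
start_or_run_lenses() {
  local name="${1:-lenses-dev}"
  # List all containers (running or stopped) by name and look for an exact match.
  if docker ps -a --format '{{.Names}}' | grep -qxF -- "$name"; then
    docker start -a "$name"
  else
    docker run -p 3030:3030 -e EULA="$EULA" --name="$name" landoop/kafka-lenses-dev
  fi
}

# Usage:
#   export EULA="CHECK_YOUR_EMAIL_FOR_KEY"
#   start_or_run_lenses lenses-dev
```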

Custom Hostname

If you are using docker-machine, setting this up in the cloud, or your DOCKER_HOST is a custom IP address such as 192.168.99.100, you will need to use the parameters --net=host -e ADV_HOST=192.168.99.100.

docker run --rm -p 3030:3030 --net=host -e ADV_HOST=192.168.99.100 -e EULA="https://dl.lenses.stream/d/?id=CHECK_YOUR_EMAIL_FOR_KEY" landoop/kafka-lenses-dev

Running Examples

Lenses provides a SQL engine for Apache Kafka handling both batch and stream queries. You can find more details about it here.

View Example Topics

The Docker container has been set up to create a handful of Kafka topics and produce data to them.

The producers (data generators) are enabled by default. To disable the examples, set the environment variable -e SAMPLEDATA=0 in the docker run command.

Run SQL Processors

To see the SQL processors in action, the following LSQL queries can be executed against the example topics:

SET autocreate = true;
INSERT INTO position_reports_Accurate
SELECT * FROM `sea_vessel_position_reports`
WHERE _vtype = 'AVRO'
AND _ktype = 'AVRO'
AND Accuracy IS true

SET autocreate = true;
INSERT INTO position_reports_latitude_filter
SELECT Speed, Heading, Latitude, Longitude, Radio
FROM `sea_vessel_position_reports`
WHERE _vtype = 'AVRO'
AND _ktype = 'AVRO'
AND Latitude > 58

SET autocreate = true;
INSERT INTO position_reports_MMSI_large
SELECT *
FROM `sea_vessel_position_reports`
WHERE _vtype = 'AVRO'
AND _ktype = 'AVRO'
AND MMSI > 100000

Of course, there is support for JSON messages as well:

SET autocreate = true;
INSERT INTO backblaze_smart_result
SELECT (smart_1_normalized + smart_3_normalized) AS sum1_3_normalized, serial_number
FROM `backblaze_smart`
WHERE _vtype = 'JSON'
AND _ktype = 'JSON'
AND _key.serial_number LIKE 'Z%'
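Once the processors are running, one way to confirm that they created their output topics is to compare the topic list against the names used in the INSERT INTO clauses. The sketch below is an illustration only: `missing_topics` is a hypothetical helper that reads a topic list on standard input and prints every expected topic that is absent.

```shell
# Hypothetical helper: read a topic list (one per line) from stdin and print
# each expected topic, given as an argument, that is not in the list.
missing_topics() {
  local topics expected
  topics="$(cat)"
  for expected in "$@"; do
    # -x matches whole lines, -F treats the name as a literal string.
    printf '%s\n' "$topics" | grep -qxF -- "$expected" || printf '%s\n' "$expected"
  done
}

# Usage (prints nothing when all four output topics exist):
#   docker exec lenses-dev kafka-topics --zookeeper localhost:2181 --list |
#     missing_topics position_reports_Accurate position_reports_latitude_filter \
#                    position_reports_MMSI_large backblaze_smart_result
```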

Note

If custom serde are required, the procedure is the same as the landoop/lenses docker image custom serde setup.

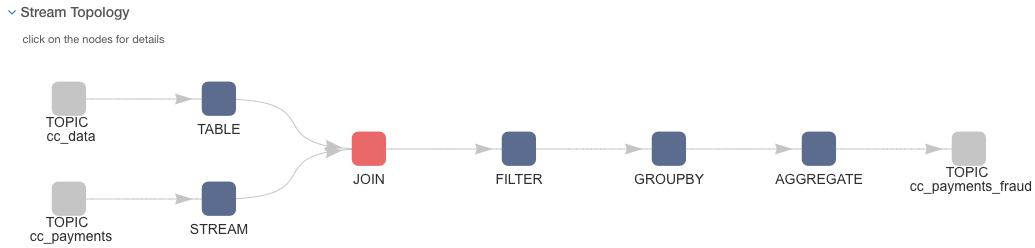

If you now visit the Topology page in Lenses, you will be able to view your first data pipelines.

LSQL processors translate to Kafka Streams applications, and LSQL covers the entire Kafka Streams API functionality.

This example shows how to set consumer, producer and streaming properties, and how to join and aggregate:

SET `autocreate`=true;
SET `auto.offset.reset`='earliest';
SET `commit.interval.ms`='120000';
SET `compression.type`='snappy';

INSERT INTO `cc_payments_fraud`
WITH tableCards AS (
  SELECT *
  FROM `cc_data`
  WHERE _ktype='STRING' AND _vtype='AVRO' )
SELECT STREAM
  p.currency,
  sum(p.amount) as total,
  count(*) usage
FROM `cc_payments` AS p LEFT JOIN tableCards AS c ON p._key = c._key
WHERE p._ktype='STRING' AND p._vtype='AVRO' and c.blocked is true
GROUP BY tumble(1,m), p.currency

View the topology

The processor detail view displays the stream topology, so you can see how your Kafka Streams application works. By clicking on any node of the topology, you can review the node configuration or view topic data. You can find a bit more in-depth information on the above query here.

Connectors

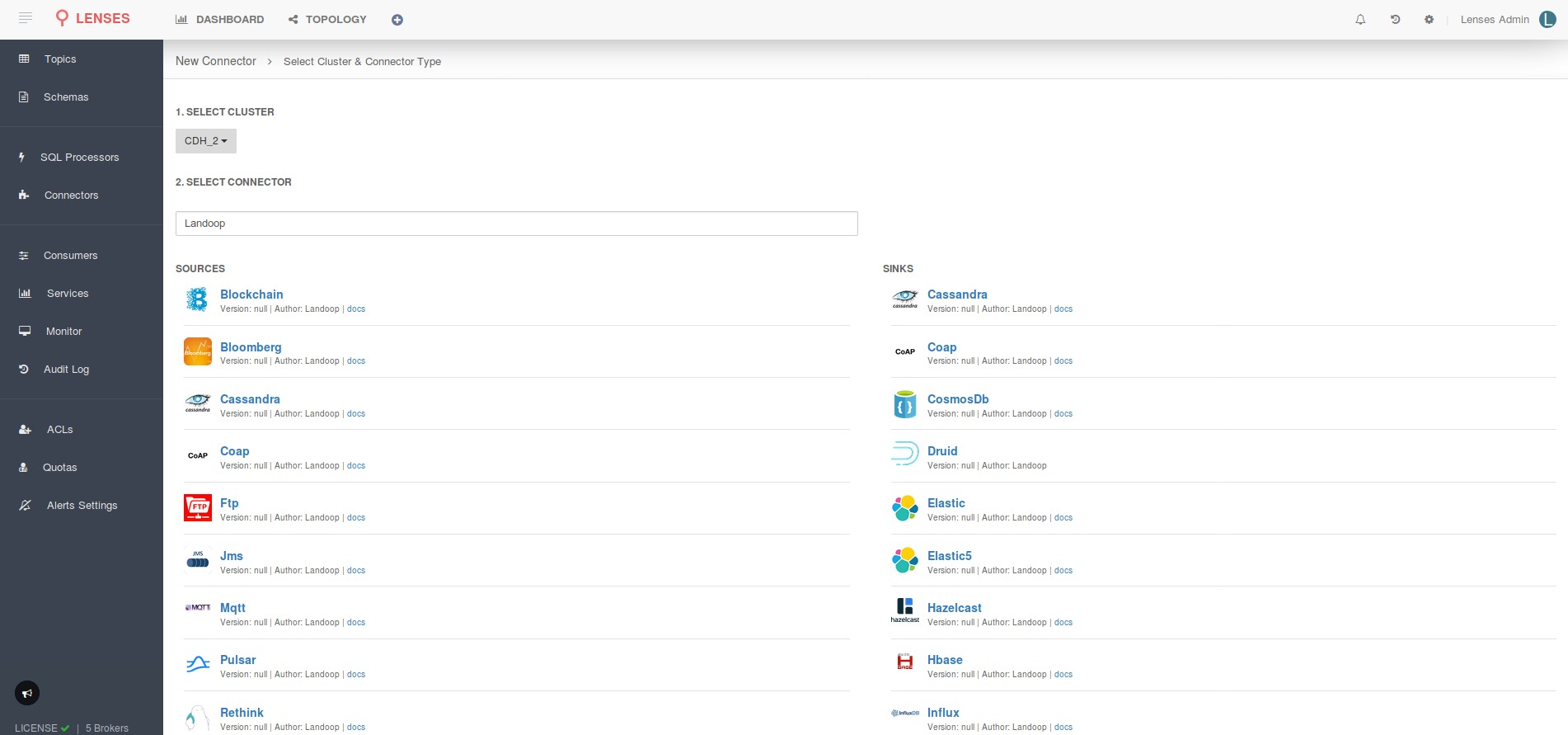

The Docker image ships with almost 25 Kafka Connectors. You will have one Kafka Connect Worker with all the connectors pre-installed in the classpath, so they are ready to be used. Follow the instructions for each connector to set its configuration and launch it via the Lenses UI or via the REST endpoints.

FAQ

1. How can I run the Development Environment offline?

To run the Docker image offline, save the license to a file (license.json in the commands that follow) when downloading from https://landoop.com/downloads/lenses and run these commands:

LFILE=`cat license.json`

docker run --rm -it -p 3030:3030 -e LICENSE="$LFILE" landoop/kafka-lenses-dev:latest

2. How much memory does Lenses require?

Lenses has been built with “mechanical sympathy” in mind. It can operate within a 4GB memory limit while handling a cluster setup containing 30 Kafka Brokers, more than 10K AVRO Schemas, more than 1000 topics and tens of Kafka Connect connector instances.

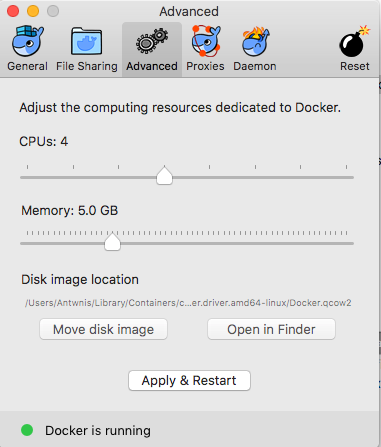

The Development Environment runs multiple services in a container: Kafka, ZooKeeper, Schema Registry, Kafka Connect, synthetic data generators and of course Lenses. We therefore recommend 5GB of RAM, although it can operate with even less than 4GB (your mileage might vary).

To reduce the memory footprint, it is possible to disable some connectors and shrink the Kafka Connect heap size by applying these options to the docker run command (remove from the list any connectors you want to keep):

-e DISABLE=azure-documentdb,blockchain,bloomberg,cassandra,coap,druid,elastic,elastic5,ftp,hazelcast,hbase,influxdb,jms,kudu,mongodb,mqtt,redis,rethink,voltdb,yahoo,hdfs,jdbc,elasticsearch,s3,twitter

-e CONNECT_HEAP=512m
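Editing the long list by hand is error-prone, so the DISABLE value can also be derived from the connectors you want to keep. The following sketch is an illustration, not a feature of the image: `disable_list` and `ALL_CONNECTORS` are hypothetical names, and the connector list is simply copied from the option above.

```shell
# Connector names copied from the DISABLE option above.
ALL_CONNECTORS="azure-documentdb,blockchain,bloomberg,cassandra,coap,druid,elastic,elastic5,ftp,hazelcast,hbase,influxdb,jms,kudu,mongodb,mqtt,redis,rethink,voltdb,yahoo,hdfs,jdbc,elasticsearch,s3,twitter"

# Hypothetical helper: print a DISABLE value containing every connector in
# ALL_CONNECTORS except the ones passed as arguments (the ones to keep).
disable_list() {
  local keep result old_ifs c
  keep=" $* "            # space-padded so names match exactly (elastic vs elastic5)
  result=""
  old_ifs="$IFS"
  IFS=','                # split ALL_CONNECTORS on commas
  for c in $ALL_CONNECTORS; do
    case "$keep" in
      *" $c "*) ;;                               # kept: leave it out of DISABLE
      *) result="${result:+$result,}$c" ;;       # not kept: add it to DISABLE
    esac
  done
  IFS="$old_ifs"
  printf '%s\n' "$result"
}

# Usage (keeps only the jdbc and mqtt connectors):
#   docker run --rm -p 3030:3030 -e EULA="CHECK_YOUR_EMAIL_FOR_KEY" \
#       -e DISABLE="$(disable_list jdbc mqtt)" -e CONNECT_HEAP=512m \
#       landoop/kafka-lenses-dev
```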

3. How can I connect Lenses to my existing Apache Kafka cluster?

To run Lenses linked to your existing Apache Kafka cluster, you will need to contact us in order to receive the Enterprise Edition, which adds Kubernetes support, extra monitoring, extra LSQL processor execution modes and more.

4. Why does my Developer Environment have topics?

To make your experience better, we have pre-configured a set of synthetic data generators so that you get streaming data out of the box. By default the Docker image launches the data generators; however, you can turn them off by setting the environment variable -e SAMPLEDATA=0 in the docker run command.

5. Is the Development Environment free?

Developer licenses are free. You can get one or more licenses from our [website](https://www.landoop.com). A license expires six months after you obtain it. Renewing your license is free! You just have to re-register. You may start your Lenses instance with a different license file and your setup will not be affected.

There are three ways to provide the license file.

- The first one, which we already saw, uses EULA:

-e EULA="[EULA]"

- If you instead choose to save the license file locally, you can provide the file path:

-v /path/to/license.json:/license.conf

- You can provide the license as an environment variable:

-e LICENSE="$(cat /path/to/license.json)"