4.2

Kafka Brokers

Lenses supports all Kafka security protocols: PLAINTEXT, SSL, SASL_PLAINTEXT and SASL_SSL. For SASL authentication, the most common mechanisms are supported, including GSSAPI (Kerberos), SCRAM and PLAIN.

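The configuration for each security protocol and authentication mechanism is shown below. For PLAINTEXT, only the list of bootstrap servers is required:

```hocon
lenses.kafka.brokers = "PLAINTEXT://host1:9092,PLAINTEXT://host2:9092"
```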

For SSL, a list of bootstrap servers (brokers) and the security protocol are required. For fault tolerance, add as many of the available brokers to this list as is practical.

Set a truststore explicitly if the global truststore of Lenses does not include the Certificate Authority (CA) of the brokers.

```hocon
lenses.kafka.brokers = "SSL://host1:9093,SSL://host2:9093"
lenses.kafka.settings.client.security.protocol = SSL
lenses.kafka.settings.client.ssl.truststore.location = "/var/private/ssl/client.truststore.jks"
lenses.kafka.settings.client.ssl.truststore.password = "changeit"
```

If TLS is also used to authenticate Lenses to the brokers, in addition to encryption in transit, a keystore is required as well.

```hocon
lenses.kafka.settings.client.ssl.keystore.location = "/var/private/ssl/client.keystore.jks"
lenses.kafka.settings.client.ssl.keystore.password = "changeit"
lenses.kafka.settings.client.ssl.key.password = "changeit"
```

For SASL/GSSAPI (Kerberos), a list of bootstrap servers (brokers) and the security protocol are required. For fault tolerance, add as many of the available brokers to this list as is practical.

When encryption in transit is used (SASL_SSL), a truststore may need to be set explicitly if the global truststore of Lenses does not include the CA of the brokers.

```hocon
lenses.kafka.brokers = "SASL_PLAINTEXT://host1:9094,SASL_PLAINTEXT://host2:9094"
lenses.kafka.settings.client.security.protocol = SASL_PLAINTEXT

# May be required when security protocol is SASL_SSL
#lenses.kafka.settings.client.ssl.truststore.location = "/var/private/ssl/client.truststore.jks"
#lenses.kafka.settings.client.ssl.truststore.password = "changeit"
```

For Kerberos (GSSAPI), a JAAS configuration and a keytab file are required. Kerberos authentication via credentials or the ticket cache is not supported. If the Kafka cluster uses an authorizer (ACLs), it is advised to use the same principal as the brokers to get the most out of Lenses (see ACLs).

```hocon
lenses.kafka.settings.client.sasl.jaas.config="""
com.sun.security.auth.module.Krb5LoginModule required
useKeyTab=true
keyTab="/path/to/keytab-file"
storeKey=true
useTicketCache=false
serviceName="kafka"
principal="principal@MYREALM";
"""
```

When using Docker or Helm, the triple quotes will be added automatically.

The Kerberos configuration file should be set explicitly if it is not at the default location (/etc/krb5.conf):

```bash
export LENSES_OPTS="-Djava.security.krb5.conf=/path/to/krb5.conf"
```
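The Kerberos example above uses SASL_PLAINTEXT. As a sketch only, the same settings could be combined for a cluster that uses Kerberos over SASL_SSL as follows. The hostnames, port, paths and principal are placeholders; the truststore lines are only needed if the Lenses global truststore does not include the brokers' CA; and `sasl.mechanism` is set to GSSAPI purely to make the choice explicit, since GSSAPI is already the Kafka client default.

```hocon
# Sketch: Kerberos (GSSAPI) over SASL_SSL; hosts, port, paths and principal are placeholders
lenses.kafka.brokers = "SASL_SSL://host1:9094,SASL_SSL://host2:9094"
lenses.kafka.settings.client.security.protocol = SASL_SSL
# GSSAPI is the Kafka client default; set here only for clarity
lenses.kafka.settings.client.sasl.mechanism = "GSSAPI"

# Only needed if the Lenses global truststore does not include the brokers' CA
lenses.kafka.settings.client.ssl.truststore.location = "/var/private/ssl/client.truststore.jks"
lenses.kafka.settings.client.ssl.truststore.password = "changeit"

lenses.kafka.settings.client.sasl.jaas.config="""
com.sun.security.auth.module.Krb5LoginModule required
useKeyTab=true
keyTab="/path/to/keytab-file"
storeKey=true
useTicketCache=false
serviceName="kafka"
principal="principal@MYREALM";
"""
```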

For SASL/SCRAM, a list of bootstrap servers (brokers), the security protocol and the SASL mechanism are required. For fault tolerance, add as many of the available brokers to this list as is practical.

When encryption in transit is used (SASL_SSL), a truststore may need to be set explicitly if the global truststore of Lenses does not include the CA of the brokers.

```hocon
lenses.kafka.brokers = "SASL_PLAINTEXT://host1:9094,SASL_PLAINTEXT://host2:9094"
lenses.kafka.settings.client.security.protocol = SASL_PLAINTEXT
lenses.kafka.settings.client.sasl.mechanism = "SCRAM-SHA-256"

# May be required when security protocol is SASL_SSL
#lenses.kafka.settings.client.ssl.truststore.location = "/var/private/ssl/client.truststore.jks"
#lenses.kafka.settings.client.ssl.truststore.password = "changeit"
```

SASL/SCRAM requires a JAAS configuration. If the Kafka cluster uses an authorizer (ACLs), it is advised to use the same principal as the brokers to get the most out of Lenses (see ACLs).

```hocon
lenses.kafka.settings.client.sasl.jaas.config="""
org.apache.kafka.common.security.scram.ScramLoginModule required
username="[USERNAME]"
password="[PASSWORD]"
serviceName="kafka";
"""
```

When using Docker or Helm, the triple quotes will be added automatically.
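Kafka supports both SCRAM-SHA-256 and SCRAM-SHA-512. The mechanism set in Lenses must be enabled on the brokers, and the user must have SCRAM credentials for that mechanism. As a sketch, a cluster using SCRAM-SHA-512 over SASL_SSL might be configured as below; the hosts, port and credentials are placeholders, and the truststore lines are only needed if the Lenses global truststore does not include the brokers' CA.

```hocon
# Sketch: SASL/SCRAM-SHA-512 over SASL_SSL; hosts, port and credentials are placeholders
lenses.kafka.brokers = "SASL_SSL://host1:9094,SASL_SSL://host2:9094"
lenses.kafka.settings.client.security.protocol = SASL_SSL
lenses.kafka.settings.client.sasl.mechanism = "SCRAM-SHA-512"

# Only needed if the Lenses global truststore does not include the brokers' CA
lenses.kafka.settings.client.ssl.truststore.location = "/var/private/ssl/client.truststore.jks"
lenses.kafka.settings.client.ssl.truststore.password = "changeit"

lenses.kafka.settings.client.sasl.jaas.config="""
org.apache.kafka.common.security.scram.ScramLoginModule required
username="[USERNAME]"
password="[PASSWORD]"
serviceName="kafka";
"""
```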

For SASL/PLAIN, a list of bootstrap servers (brokers), the security protocol and the SASL mechanism are required. For fault tolerance, add as many of the available brokers to this list as is practical.

When encryption in transit is used (SASL_SSL), a truststore may need to be set explicitly if the global truststore of Lenses does not include the CA of the brokers.

```hocon
lenses.kafka.brokers = "SASL_PLAINTEXT://host1:9094,SASL_PLAINTEXT://host2:9094"
lenses.kafka.settings.client.security.protocol = SASL_PLAINTEXT
lenses.kafka.settings.client.sasl.mechanism = "PLAIN"

# May be required when security protocol is SASL_SSL
#lenses.kafka.settings.client.ssl.truststore.location = "/var/private/ssl/client.truststore.jks"
#lenses.kafka.settings.client.ssl.truststore.password = "changeit"
```

SASL/PLAIN requires a JAAS configuration. If the Kafka cluster uses an authorizer (ACLs), it is advised to use the same principal as the brokers to get the most out of Lenses (see ACLs).

```hocon
lenses.kafka.settings.client.sasl.jaas.config="""
org.apache.kafka.common.security.plain.PlainLoginModule required
username="[USERNAME]"
password="[PASSWORD]"
serviceName="kafka";
"""
```

When using Docker or Helm, the triple quotes will be added automatically.

When Lenses is deployed via the AWS Marketplace, the Kafka broker configuration for Amazon MSK is retrieved automatically.

When the Marketplace deployment is not used, manual configuration is required. Log in to the AWS Console, open the MSK cluster, get the broker addresses and security settings, and apply them to Lenses.

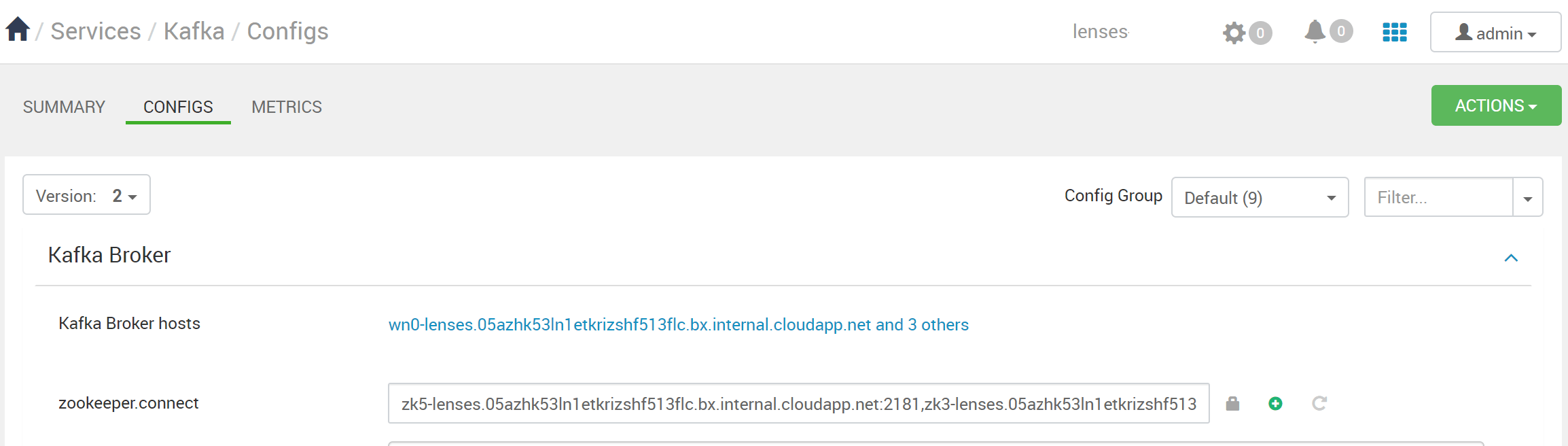
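As an illustration only, if the MSK cluster uses SASL/SCRAM client authentication, the settings gathered from the console might be applied as below. This sketch assumes the MSK SASL/SCRAM listener on port 9096 and the SCRAM-SHA-512 mechanism (the SCRAM variant MSK supports); the broker hostnames and credentials are placeholders. It also assumes the JVM default truststore already trusts the MSK broker certificates, so no truststore is set. The bootstrap broker strings can also be listed with the AWS CLI: `aws kafka get-bootstrap-brokers --cluster-arn <your-msk-cluster-arn>`.

```hocon
# Sketch: Amazon MSK with SASL/SCRAM; broker hostnames and credentials are placeholders
lenses.kafka.brokers = "SASL_SSL://b-1.<msk-cluster-endpoint>:9096,SASL_SSL://b-2.<msk-cluster-endpoint>:9096"
lenses.kafka.settings.client.security.protocol = SASL_SSL
# MSK supports SCRAM-SHA-512 for SASL/SCRAM
lenses.kafka.settings.client.sasl.mechanism = "SCRAM-SHA-512"
lenses.kafka.settings.client.sasl.jaas.config="""
org.apache.kafka.common.security.scram.ScramLoginModule required
username="[USERNAME]"
password="[PASSWORD]";
"""
```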

When Lenses is deployed via the HDInsight Marketplace, the Kafka broker configuration is retrieved automatically.

For manual installations, or installations via the Azure Marketplace, log in to HDInsight’s Ambari dashboard, get the broker addresses and security settings, and apply them to Lenses.

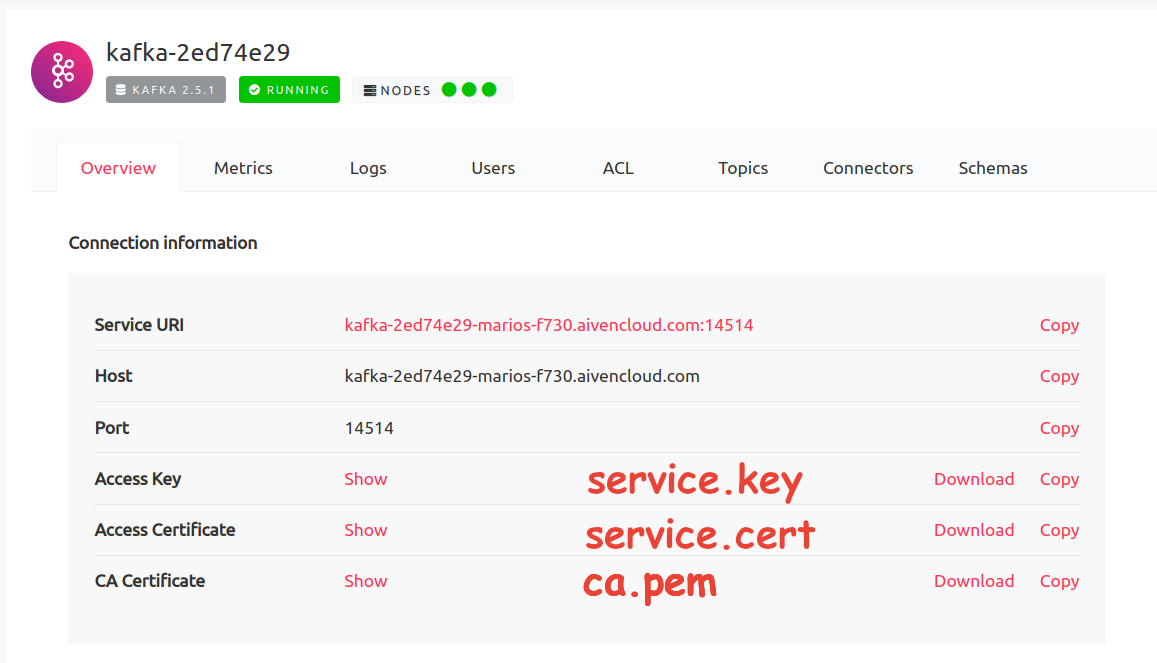

Aiven requires authentication to the Kafka brokers via the SSL security protocol. A private key and client certificate pair, as well as a CA certificate, are provided in PEM format and can be downloaded from the Aiven Console.

In the services list, select the Kafka service for Lenses, then download the CA certificate (ca.pem) from the Overview tab. Next, download the private key (service.key) and client certificate (service.cert) pair, either from the Overview tab for the avnadmin account or from the Users tab for any user.

If you are installing Lenses from an archive, you must convert the PEM files to a Java keystore and truststore. Use openssl and the Java keytool to convert the files. The Lenses Docker image can perform these conversions automatically.

```bash
# Use openssl to bundle the client certificate and private key into a PKCS12 file.
# Note we also set a password: 'changeit'.
openssl pkcs12 -export \
  -in service.cert -inkey service.key \
  -out service.p12 \
  -name service \
  -passout pass:changeit

# Use keytool to convert the PKCS12 file to a Java keystore file.
# Note we also set the password to 'changeit'.
keytool -importkeystore -noprompt -v \
  -srckeystore service.p12 -srcstoretype PKCS12 -srcstorepass changeit \
  -srcalias service \
  -deststorepass changeit -destkeypass changeit -destkeystore service.jks

# Use keytool to import the CA certificate into a Java truststore file.
# Note we also set the password to 'changeit'.
keytool -importcert -noprompt \
  -keystore truststore.jks \
  -alias aiven-cluster-ca \
  -file ca.pem \
  -storepass changeit
```

Aiven uses ACLs managed by the Aiven Console to configure access to the Kafka service. It is recommended to either use the admin account (avnadmin) or assign admin rights on all topics to the Lenses user. A limited set of permissions may be used, with a corresponding degradation of features. See ACLs for more information.

Next, add the Aiven Kafka host and port, the security protocol, and the keystore and truststore files to lenses.conf.

```hocon
lenses.kafka.brokers = "SSL://host:port"
lenses.kafka.settings.client.security.protocol = SSL
lenses.kafka.settings.client.ssl.truststore.location = "/path/to/truststore.jks"
lenses.kafka.settings.client.ssl.truststore.password = "changeit"
lenses.kafka.settings.client.ssl.keystore.location = "/path/to/service.jks"
lenses.kafka.settings.client.ssl.keystore.password = "changeit"
lenses.kafka.settings.client.ssl.key.password = "changeit"
```

IBM Event Streams uses the SASL/PLAIN mechanism for broker authentication. A set of Service Credentials is required; it can be obtained by following the Event Streams documentation. The Manager role is advised for the Service Credentials used by Lenses.

In lenses.conf, set the broker addresses, the security protocol, and the SASL mechanism.

```hocon
lenses.kafka.brokers = "SASL_SSL://host1:9096,SASL_SSL://host2:9096"
lenses.kafka.settings.client.security.protocol = "SASL_SSL"
lenses.kafka.settings.client.sasl.mechanism = "PLAIN"
lenses.kafka.settings.client.sasl.jaas.config="""
org.apache.kafka.common.security.plain.PlainLoginModule required
username="[USERNAME]"
password="[PASSWORD]"
serviceName="kafka";
"""
```

When using Docker or Helm, the triple quotes for the JAAS section will be added automatically.

Confluent Cloud uses the SASL/PLAIN mechanism for broker authentication. An API key and secret pair is required; it can be obtained by following the Confluent Cloud documentation.

Confluent Cloud uses standard Kafka ACLs. It is advised to give the Lenses account access to all resources. A limited set of permissions may be used, with a corresponding degradation of features. See ACLs for more information on the permissions required.

In lenses.conf, set the broker addresses, the security protocol, and the SASL mechanism.

```hocon
lenses.kafka.brokers = "SASL_SSL://host1:9096,SASL_SSL://host2:9096"
lenses.kafka.settings.client.security.protocol = "SASL_SSL"
lenses.kafka.settings.client.sasl.mechanism = "PLAIN"
lenses.kafka.settings.client.sasl.jaas.config="""
org.apache.kafka.common.security.plain.PlainLoginModule required
username="[USERNAME]"
password="[PASSWORD]"
serviceName="kafka";
"""

# Settings required by Confluent
lenses.kafka.settings.client.client.dns.lookup = "use_all_dns_ips"
lenses.kafka.settings.client.acks = "all"
lenses.kafka.settings.client.linger.ms = 5

# Settings recommended by Confluent
lenses.kafka.settings.client.reconnect.backoff.max.ms = 30000
lenses.kafka.settings.client.default.api.timeout.ms = 300000
```

When using Docker or Helm, the triple quotes for the JAAS section will be added automatically.

Enabling JMX on your Brokers

Enable JMX on each broker by exporting the JMX port via the JMX_PORT environment variable:

```bash
export JMX_PORT=[JMX_PORT]
```

To enable remote access to JMX, also export the KAFKA_JMX_OPTS environment variable. Note that these options disable JMX authentication and SSL:

```bash
export KAFKA_JMX_OPTS="-Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.local.only=false -Dcom.sun.management.jmxremote.rmi.port=[JMX_PORT]"
```

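In lenses.conf, set the JMX port exposed by the brokers:

```hocon
lenses.kafka.metrics.default.port = 9581
```

If remote JMX requires SSL or authentication, use the full metrics configuration instead:

```hocon
lenses.kafka.metrics = {
  ssl: true,         # Optional, make sure the remote JMX certificate
                     # is accepted by the Lenses truststore
  user: "admin",     # Optional, the remote JMX user
  password: "admin", # Optional, the remote JMX password
  type: "JMX",
  default.port: 9581
}
```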

If the brokers expose JMX over HTTP through the Jolokia agent, use the Jolokia metrics type:

```hocon
lenses.kafka.metrics = {
  ssl: true,              # Optional, make sure the remote JMX certificate
                          # is accepted by the Lenses truststore
  user: "admin",          # Optional, the Jolokia user if required
  password: "admin",      # Optional, the Jolokia password if required
  type: "JOLOKIAP",       # 'JOLOKIAP' for the POST API, 'JOLOKIAG' for the GET API
  default.port: 19999,
  http.timeout.ms: 60000  # Optional, how long to wait for a metrics response (defaults to 60000 ms)
}
```

Amazon MSK exposes JMX metrics through Open Monitoring.

If Lenses is deployed through the AWS Marketplace, the Kafka broker JMX ports are configured automatically. For a manual integration, enable Open Monitoring on MSK via the AWS Console and set lenses.kafka.metrics:

```hocon
lenses.kafka.metrics = {
  type: "AWS",
  port: [
    {id: <broker-id-1>, url: "http://b-1.<broker.1.endpoint>:11001/metrics"},
    {id: <broker-id-2>, url: "http://b-2.<broker.2.endpoint>:11001/metrics"},
    {id: <broker-id-3>, url: "http://b-3.<broker.3.endpoint>:11001/metrics"}
  ],
  aws.cache.ttl.ms: 60000, # Optional, the time to live of the Lenses MSK metrics cache (defaults to 60000 ms)
  http.timeout.ms: 60000   # Optional, how long to wait for a metrics response (defaults to 60000 ms)
}
```

To fetch the broker IDs and the Prometheus endpoints, use the AWS CLI:

```bash
aws kafka list-nodes --cluster-arn <your-msk-cluster-arn>
```

By default, Lenses caches the MSK Open Monitoring metrics for one minute. This cache expiration time can be overridden through the lenses.kafka.metrics.aws.cache.ttl.ms config key.

If Lenses is deployed through the HDInsight Marketplace, the Kafka broker JMX ports are configured automatically.

If you are deploying outside of the HDInsight Marketplace or via the Azure Marketplace, log in to your HDInsight cluster's Ambari dashboard, retrieve the broker URLs and JMX ports, and update the lenses.conf file:

```hocon
lenses.kafka.metrics = {
  ssl: true,         # Optional, make sure the remote JMX certificate
                     # is accepted by the Lenses truststore
  user: "admin",     # Optional, the remote JMX user
  password: "admin", # Optional, the remote JMX password
  type: "JMX",
  default.port: 9581
}
```

Aiven provides access to JMX over HTTP via the Jolokia agent. Enable Jolokia in Aiven Cloud, then configure Lenses:

```hocon
lenses.kafka.metrics = {
  ssl: true,         # Optional, make sure the remote JMX certificate
                     # is accepted by the Lenses truststore
  user: "admin",     # Optional, the Jolokia user if required
  password: "admin", # Optional, the Jolokia password if required
  type: "JOLOKIAP",  # 'JOLOKIAP' for the POST API, 'JOLOKIAG' for the GET API
  default.port: 19999
}
```