FTP Source

Download connector: FTP Connector 1.2 for Kafka, FTP Connector 1.1 for Kafka

Blog: Polling FTP location and bringing data into Kafka

A Kafka connector that monitors files on an FTP server and feeds changes into Kafka.

You provide the remote directories to monitor, and at a specified interval the list of files in those directories is refreshed. A file is downloaded when it has not been seen before, or when its timestamp or size has changed. Only files whose timestamp is younger than the specified maximum age are considered. Hashes of the files are maintained and used to check for content changes. Changed files are then fed into Kafka, either as a whole (update) or only the appended part (tail), depending on the configuration. Optionally, file bodies can be transformed through a pluggable system before they are put into Kafka.
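The fetch decision described above can be sketched as follows. This is a minimal illustration, not the connector's actual code: `FileMeta`, `ChangeDetection`, `shouldFetch`, and `contentChanged` are hypothetical names, treating a file exactly at the maximum age as still eligible is an assumption, and SHA-256 stands in for whatever hash the connector really uses.

```scala
import java.security.MessageDigest

// Hypothetical snapshot of what is known about a remote file after a listing.
final case class FileMeta(name: String, timestampMillis: Long, size: Long)

object ChangeDetection {
  // Decide whether a listed file should be downloaded. `previous` is the
  // metadata from the last poll, or None if the file has never been seen.
  def shouldFetch(current: FileMeta, previous: Option[FileMeta],
                  nowMillis: Long, maxAgeMillis: Long): Boolean = {
    // Assumption: a file exactly maxAge old is still considered.
    val youngEnough = nowMillis - current.timestampMillis <= maxAgeMillis
    val newOrChanged = previous match {
      case None       => true
      case Some(prev) =>
        prev.timestampMillis != current.timestampMillis || prev.size != current.size
    }
    youngEnough && newOrChanged
  }

  // Hex-encoded SHA-256 digest (assumed hash function) of a downloaded body.
  def sha256Hex(body: Array[Byte]): String =
    MessageDigest.getInstance("SHA-256").digest(body).map("%02x".format(_)).mkString

  // Compare the body's hash with the stored one; no stored hash counts as changed.
  def contentChanged(body: Array[Byte], previousHash: Option[String]): Boolean =
    !previousHash.contains(sha256Hex(body))
}
```

In this sketch the cheap metadata check gates the download, and the hash check then filters out files whose timestamp or size changed without their bytes changing.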

Data Types

Each Kafka record represents a file and has the following types.

- The format of the keys is configurable through connect.ftp.keystyle=string|struct. A key is either a string with the file name, or a FileInfo structure with name: string and offset: long. The offset is always 0 for files that are updated as a whole, so it is only relevant for tailed files.

- The values of the records contain the body of the file as bytes.
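To make the two key styles concrete, the sketch below models them as plain Scala types. It is only an illustration: in the connector the struct key is a Kafka Connect structure rather than a case class, and `RecordKey`, `StringKey`, `KeyStyle`, and `makeKey` are hypothetical names. It also assumes that, for tailed files, the offset is the byte position at which the emitted chunk starts.

```scala
// Hypothetical plain-Scala model of the two key styles.
sealed trait RecordKey
final case class StringKey(fileName: String) extends RecordKey
final case class FileInfo(name: String, offset: Long) extends RecordKey

object KeyStyle {
  // `keyStyle` mirrors connect.ftp.keystyle ("string" or "struct").
  // `offset` is assumed to be where the emitted bytes start in the file.
  def makeKey(keyStyle: String, fileName: String, offset: Long): RecordKey =
    keyStyle match {
      case "string" => StringKey(fileName)
      case "struct" => FileInfo(fileName, offset)
      case other    => throw new IllegalArgumentException(s"Unknown keystyle: $other")
    }
}
```

With the string style the offset is dropped, so successive tailed chunks of the same file get identical keys; the struct style keeps them distinguishable.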

Installing the Connector

In production, Connect should be run in distributed mode:

- Install and configure a Kafka Connect cluster.

- Create a folder on each server called `plugins/lib`.

- Copy the required connector jars from the Stream Reactor download into the above folder.

- Edit `connect-avro-distributed.properties` in the `etc/schema-registry` folder and uncomment the `plugin.path` option. Set it to the root directory (i.e. `plugins`) in which you deployed the Stream Reactor connector jars.

- Start Connect:

```bash
bin/connect-distributed etc/schema-registry/connect-avro-distributed.properties
```

Connect workers are long-running processes, so set up an init.d or systemd service accordingly.

Lenses QuickStart

The easiest way to try this out is with Lenses Box, the pre-configured Docker image that comes with this connector pre-installed. Go to Connectors → New Connector → Source → FTP and paste your configuration.

Configuration

In addition to the general configuration for Kafka connectors (e.g. name, connector.class, etc.), the following options are available.

| Config | Description | Type |
|---|---|---|
| connect.ftp.address | host[:port] of the FTP server | string |
| connect.ftp.user | Username to connect with | string |
| connect.ftp.password | Password to connect with | string |
| connect.ftp.refresh | ISO 8601 duration specifying how often the server is polled | string |
| connect.ftp.file.maxage | ISO 8601 duration specifying the maximum age of files to consider | string |
| connect.ftp.keystyle | SourceRecord key style: string or struct | string |
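The refresh and maxage values are ISO 8601 durations, so `PT1M` means one minute and `P14D` means 14 days. The sketch below uses `java.time.Duration.parse` to illustrate how such values can be interpreted; whether the connector parses them the same way is an assumption. Note that `Duration.parse` only accepts day and time components and rejects month or year forms such as `P1M`. `FtpDurations` and `withinMaxAge` are hypothetical names.

```scala
import java.time.{Duration, Instant}

object FtpDurations {
  // Parse an ISO 8601 duration, e.g. "PT1M" (1 minute) or "P14D" (14 days).
  def parse(iso: String): Duration = Duration.parse(iso)

  // True if the file's timestamp is no older than `maxAge` relative to `now`.
  def withinMaxAge(fileTime: Instant, now: Instant, maxAge: Duration): Boolean =
    !fileTime.isBefore(now.minus(maxAge))
}
```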

Optional configurations:

| Config | Description | Type |
|---|---|---|
| connect.ftp.monitor.tail | Comma-separated list of path:destinationtopic entries to tail | string |
| connect.ftp.monitor.update | Comma-separated list of path:destinationtopic entries to monitor for updates | string |
| connect.ftp.sourcerecordconverter | Source record converter class name | string |
| connect.ftp.filter | Regular expression used to select files for processing; files that do not match are ignored. Defaults to ‘.*’ if not set. | string (regex) |
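The monitor options take comma-separated `path:destinationtopic` pairs, and the filter is a regular expression. The sketch below shows one way to read them. It is an assumption that entries are split on the last colon and that the filter must match the whole file name rather than a substring; `MonitorConfig`, `parseMonitorList`, and `selectFiles` are hypothetical names.

```scala
object MonitorConfig {
  // Parse "path:topic,path:topic" into (path, topic) pairs, splitting each
  // entry on its last ':' so the topic is everything after it.
  def parseMonitorList(value: String): Seq[(String, String)] =
    value.split(",").toSeq.map(_.trim).filter(_.nonEmpty).map { entry =>
      val idx = entry.lastIndexOf(':')
      require(idx > 0 && idx < entry.length - 1, s"Expected path:topic, got '$entry'")
      (entry.substring(0, idx), entry.substring(idx + 1))
    }

  // Keep only names that fully match the filter regex (default ".*").
  def selectFiles(names: Seq[String], filter: String = ".*"): Seq[String] = {
    val pattern = filter.r.pattern
    names.filter(name => pattern.matcher(name).matches())
  }
}
```

For example, `parseMonitorList("/forecasts/weather/:weather,/logs/:error-logs")` yields the pairs `("/forecasts/weather/", "weather")` and `("/logs/", "error-logs")`.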

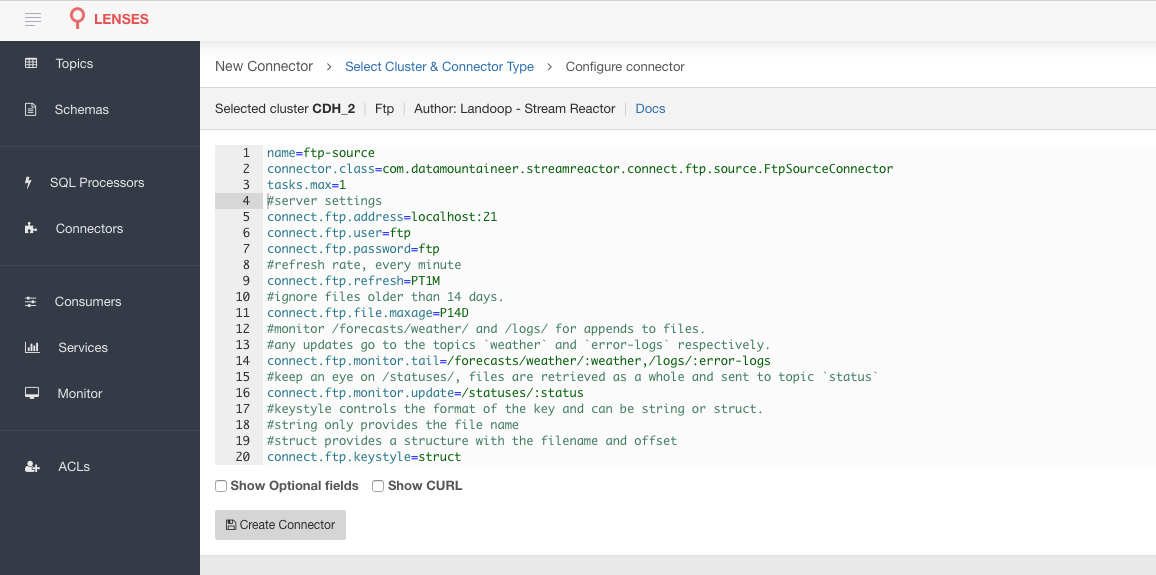

```properties
name=ftp-source
connector.class=com.datamountaineer.streamreactor.connect.ftp.source.FtpSourceConnector
tasks.max=1

# server settings
connect.ftp.address=localhost:21
connect.ftp.user=ftp
connect.ftp.password=ftp

# refresh rate: every minute
connect.ftp.refresh=PT1M

# ignore files older than 14 days
connect.ftp.file.maxage=P14D

# monitor /forecasts/weather/ and /logs/ for appends to files;
# any updates go to the topics weather and error-logs respectively
connect.ftp.monitor.tail=/forecasts/weather/:weather,/logs/:error-logs

# keep an eye on /statuses/; files are retrieved as a whole and sent to topic status
connect.ftp.monitor.update=/statuses/:status

# keystyle controls the format of the key and can be string or struct:
# string only provides the file name,
# struct provides a structure with the file name and offset
connect.ftp.keystyle=struct
```

Tailing Versus Update as a Whole

The following rules apply:

- Tailed files are only allowed to grow. The bytes appended since the last inspection are yielded; the preceding bytes must not change.

- Updated files can grow, shrink, and change anywhere. The entire contents are yielded.
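The two rules above can be sketched as a pair of functions that return the byte offset and the bytes to emit. This is a simplification: it keeps the previously seen body in memory, whereas the connector tracks hashes, and it signals a tail-rule violation with `None` because how the connector reacts to one is not described here. `TailOrUpdate`, `tail`, and `update` are hypothetical names.

```scala
object TailOrUpdate {
  // Tail: the current body must start with the previously seen bytes.
  // Returns the offset where the appended part starts and the appended bytes,
  // or None if earlier bytes changed or the file shrank.
  def tail(previous: Array[Byte], current: Array[Byte]): Option[(Long, Array[Byte])] =
    if (current.startsWith(previous))
      Some((previous.length.toLong, current.drop(previous.length)))
    else None

  // Update: any change is allowed; the whole body is emitted from offset 0.
  def update(current: Array[Byte]): (Long, Array[Byte]) = (0L, current)
}
```

The offsets returned here line up with the struct key described under Data Types: always 0 for updated files, and the start of the appended chunk for tailed files.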

Note

All credit for this connector goes to Eneco’s team; this connector was forked from their project!

Data Converters

Instead of dumping whole file bodies (and risking exceeding Kafka’s message.max.bytes), one might want to interpret the data contained in the files before putting it into Kafka. For example, if the files fetched from the FTP server are comma-separated values (CSVs), one might prefer a stream of CSV records instead. To allow this, the connector provides a pluggable conversion of SourceRecords. Right before a SourceRecord is sent to the Connect framework, it is run through an object that implements:

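```scala
package com.datamountaineer.streamreactor.connect.ftp

import org.apache.kafka.common.Configurable
import org.apache.kafka.connect.source.SourceRecord

trait SourceRecordConverter extends Configurable {
  def convert(in: SourceRecord): java.util.List[SourceRecord]
}
```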

(For Java users: read interface instead of trait.)

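The default converter is a pass-through, an instance of:

```scala
import java.util
import org.apache.kafka.connect.source.SourceRecord
import scala.collection.JavaConverters._

class NopSourceRecordConverter extends SourceRecordConverter {
  // Nothing to configure for a pass-through converter
  override def configure(props: util.Map[String, _]): Unit = {}
  // Return the incoming record unchanged, as a single-element list
  override def convert(in: SourceRecord): util.List[SourceRecord] = Seq(in).asJava
}
```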

To override it, create your own implementation of SourceRecordConverter, put the jar on your $CLASSPATH, and instruct the connector to use it in the .properties file:

```properties
connect.ftp.sourcerecordconverter=your.name.space.YourConverter
```
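For the CSV case mentioned earlier, the core of a custom converter is splitting one file body into records. The sketch below shows only that part, without Kafka dependencies, assuming UTF-8 content and no line breaks inside quoted fields; `CsvSplit` and `splitLines` are hypothetical names. Inside a real `convert` implementation, each line could then be wrapped in its own record, for example via Kafka Connect's `ConnectRecord.newRecord(...)`.

```scala
import java.nio.charset.StandardCharsets

object CsvSplit {
  // Decode the body as UTF-8 and return one entry per non-empty line
  // (handles both \n and \r\n line endings).
  def splitLines(body: Array[Byte]): Seq[String] =
    new String(body, StandardCharsets.UTF_8)
      .split("\r?\n")
      .toSeq
      .filter(_.nonEmpty)
}
```

With tailed files, an appended chunk may end in the middle of a line, so a production converter would likely need to buffer the trailing partial line; this sketch does not.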

Tip

To learn more about the FTP Kafka connector, read the blog post "Polling FTP location and bringing data into Kafka".

Troubleshooting

Please review the FAQs and join our Slack channel.