Basic Concepts¶

This page will teach you some basic concepts that can help you understand Lenses and work more efficiently.

Lenses¶

Lenses is a JVM application that ships as a Linux archive, Docker container, Kubernetes Helm chart or cloud distribution. It requires connectivity to your Kafka cluster and peripheral services in order to operate.

Apache Kafka¶

Apache Kafka is an open source distributed streaming platform built around the abstraction of a commit log. Apache Kafka provides the pipes through which data can be integrated, processed and analysed in real time. Kafka is one of several systems that make up a data platform.

You can learn more about Kafka here.

Kafka Topic¶

A topic is a unit of storage in Kafka. A topic is sharded into partitions. You can think of each topic-partition pair as an append-only log. All data in Kafka is stored in topics.
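The idea of a topic as a set of append-only partition logs can be sketched in a few lines of Python. This is a toy in-memory model, not Kafka's implementation: the `Topic` class, the `payments` topic name and the use of CRC32 to pick a partition are all assumptions made for illustration.

```python
import zlib


class Topic:
    """Toy in-memory model of a topic: a list of append-only partition logs."""

    def __init__(self, name, num_partitions):
        self.name = name
        self.partitions = [[] for _ in range(num_partitions)]

    def partition_for(self, key):
        # Assumption: CRC32 stands in for whatever hash a real client uses.
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def append(self, key, value):
        """Append a record to the end of a partition; return (partition, offset)."""
        partition = self.partition_for(key)
        log = self.partitions[partition]
        log.append((key, value))
        return partition, len(log) - 1


# Hypothetical topic and keys, for illustration only.
topic = Topic("payments", num_partitions=3)
first = topic.append("customer-1", "10.00")
second = topic.append("customer-1", "12.50")
```

In this sketch, records with the same key land in the same partition, and each append receives the next offset in that partition, so `second` has the same partition as `first` and an offset one higher.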

Kafka Producer¶

Any application that stores data in a Kafka topic is called a data producer. We say that a Kafka producer publishes messages to a Kafka topic.

Kafka provides producer APIs which are used in several parts of the Kafka ecosystem: Kafka Connect, Kafka Streams, as well as custom-made applications.

Lenses uses these APIs to allow data to be inserted into Kafka.

Kafka Consumer¶

Kafka Consumers are applications that read data from Kafka. Kafka provides consumer APIs which are used in several parts of the Kafka ecosystem: Kafka Connect, Kafka Streams, as well as custom-made applications.

You can use the command line consumer to simply read data; however, it only prints to the terminal, which is not a user-friendly experience and makes access to and visibility of the data difficult, especially for non-developers. A key pillar of DataOps is visibility for all.

As with Kafka Producers, Lenses uses the APIs Kafka provides to enable data visibility via SQL on streaming systems like Kafka.
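What "reading data from Kafka" means for a single partition can be sketched as follows: a consumer keeps a position (an offset) in the log and each poll returns the records after that position. The `ToyConsumer` class and its `poll` method are hypothetical names for this sketch and do not use the real Kafka client API.

```python
class ToyConsumer:
    """Reads records from one partition log and remembers its position."""

    def __init__(self, log, start_offset=0):
        self.log = log
        self.position = start_offset

    def poll(self, max_records=10):
        """Return up to max_records unread records and advance the position."""
        batch = self.log[self.position:self.position + max_records]
        self.position += len(batch)
        return batch


# A plain list stands in for a partition log (assumption for illustration).
log = ["a", "b", "c"]
consumer = ToyConsumer(log)
first_batch = consumer.poll(max_records=2)   # ["a", "b"]
log.append("d")                              # a producer appends meanwhile
second_batch = consumer.poll()               # ["c", "d"]
```

Because the consumer only tracks an offset, the log itself is never modified by reading, so several consumers could read the same data independently.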

Kafka Connect¶

Kafka Connect is a common framework for moving data into and out of Kafka, from and to external systems.

Lenses Kafka Connectors¶

A Lenses Kafka Connector (a.k.a. Stream Reactor) is any Kafka Connector offered by Lenses. Connectors allow you to move data into Kafka (Sources) and out of Kafka (Sinks). Lenses connectors support KCQL (Kafka Connect Query Language), which is an open source component of the Lenses SQL Engine.

All available Lenses Kafka Connectors are open source under the Apache 2 license.

We are proud of the rich list of available connectors, and we are enriching that list all the time!

The Lenses SQL Engine

In order to support the distinction between data at rest and data in motion, the Lenses SQL engine is split into three components:

- Table/Continuous based query engine, which is for table and continuous live stream queries (Lenses SQL).

- Streaming based engine, which is for continuous processing (Lenses Processors).

- Kafka Connect based engine.

Lenses SQL¶

Lenses SQL is used for querying data stored in Kafka topics. You can learn more about Lenses SQL at the Lenses SQL documentation page.

Lenses Processors¶

Lenses SQL processors are continuous processors that can be deployed in several modes to process, in real time, data flowing through a streaming layer. This continuous execution enables scenarios in which applications continuously join, filter and aggregate data and write the results back to the streaming layer, Kafka. The processors are defined in SQL.

Because live data in a stream is unbounded (it never ends), you have to restrict it in some way for certain types of queries, such as aggregations. This restriction of streaming data is called windowing. Lenses supports the following kinds of windowing:

- Hopping time windows

- Tumbling time windows

- Sliding windows, which are only used for joining data streams

- Session windows
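How records are assigned to windows can be sketched in plain Python. This is a minimal illustration of the general windowing concepts, not the Lenses implementation: the function names are hypothetical, timestamps are plain integers, windows are assumed to be aligned to time 0, and the session gap is treated as inclusive.

```python
def tumbling_window(ts, size):
    """Return the single fixed, non-overlapping window (start, end) containing ts."""
    start = ts - (ts % size)
    return (start, start + size)


def hopping_windows(ts, size, advance):
    """Return every overlapping window of length size, stepping by advance, containing ts."""
    windows = []
    start = ts - (ts % advance)  # latest window start that is <= ts
    while start > ts - size and start >= 0:
        windows.append((start, start + size))
        start -= advance
    return sorted(windows)


def session_windows(timestamps, gap):
    """Group timestamps into sessions separated by more than gap of inactivity."""
    sessions = []
    for ts in sorted(timestamps):
        if sessions and ts - sessions[-1][1] <= gap:
            sessions[-1][1] = ts      # extend the current session
        else:
            sessions.append([ts, ts])  # start a new session
    return [tuple(s) for s in sessions]


print(tumbling_window(12, size=10))              # (10, 20)
print(hopping_windows(12, size=10, advance=5))   # [(5, 15), (10, 20)]
print(session_windows([1, 2, 10, 11, 30], 5))    # [(1, 2), (10, 11), (30, 30)]
```

A record falls into exactly one tumbling window but possibly several hopping windows, while session windows have no fixed size and depend only on the gaps between records.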

Please note that some continuous processors over streaming data require no time bounds, for example filtering, projections and functions, such as the following:

SELECT *
FROM A
WHERE x > 100
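To see why a filter like this needs no window, here is a rough Python analogue. Each record is judged on its own, so the filter can run over an endless stream without holding any state. The `sensor_readings` generator and its `id` field are hypothetical stand-ins for the stream `A`; only the field `x` and the threshold come from the query above.

```python
import itertools


def sensor_readings():
    """Hypothetical endless stream standing in for A."""
    for i in itertools.count():
        yield {"id": i, "x": i * 40}


def filter_stream(records, threshold=100):
    """Lazily yield records whose field x exceeds threshold (stateless)."""
    for record in records:
        if record["x"] > threshold:
            yield record


# Take only the first three matches; the source itself never ends.
first_three = list(itertools.islice(filter_stream(sensor_readings()), 3))
print([r["id"] for r in first_three])  # [3, 4, 5]
```

An aggregation such as a count or sum, by contrast, would never produce a final answer over this stream unless it were restricted to a window.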

Lenses SQL Processors implement both the Kafka Consumer and Producer API.

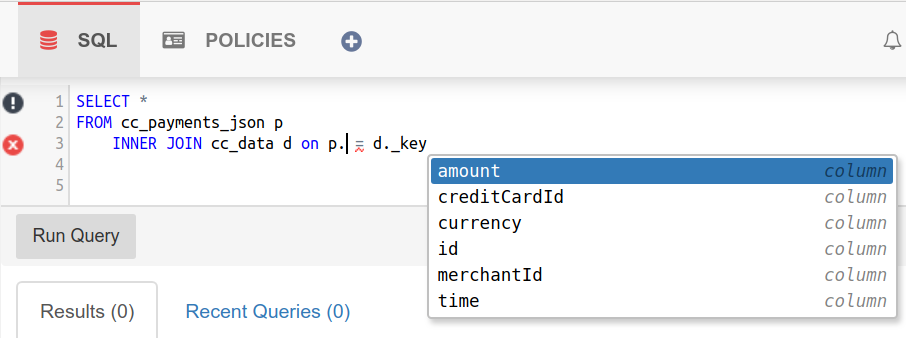

Intellisense¶

Intellisense is the coding assistance offered by Lenses and its SQL engine. The main purpose of Intellisense is to make your coding experience more productive and enjoyable.

Avro¶

Strictly speaking, Apache Avro is a data serialization framework. Avro messages are defined with a schema. Lenses provides integration and management of the schemas.
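As a rough illustration of what "defined with a schema" means, the sketch below declares an Avro record schema (Avro schemas are written in JSON) and checks records against it. The `Payment` schema, its fields and the `conforms` helper are hypothetical. The check is a simplification that only handles the `string` and `double` primitive types, and it does not use an Avro library or perform Avro serialization.

```python
import json

# Hypothetical Avro record schema, declared in JSON as Avro schemas are.
PAYMENT_SCHEMA = json.loads("""
{
  "type": "record",
  "name": "Payment",
  "namespace": "com.example",
  "fields": [
    {"name": "id", "type": "string"},
    {"name": "amount", "type": "double"},
    {"name": "currency", "type": "string"}
  ]
}
""")

# Simplified mapping of two Avro primitive type names to Python types.
PRIMITIVES = {"string": str, "double": float}


def conforms(record, schema):
    """Return True if record has exactly the schema's fields with matching types."""
    fields = schema["fields"]
    if set(record) != {f["name"] for f in fields}:
        return False
    return all(isinstance(record[f["name"]], PRIMITIVES[f["type"]]) for f in fields)


good = {"id": "p-1", "amount": 9.99, "currency": "EUR"}
bad = {"id": "p-1", "amount": "9.99", "currency": "EUR"}  # amount is a string
print(conforms(good, PAYMENT_SCHEMA))  # True
print(conforms(bad, PAYMENT_SCHEMA))   # False
```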

This Quick Tour will not use Avro; however, Avro is a key component of any data platform and Lenses provides support for it.

You can learn more about Avro here.