Overview

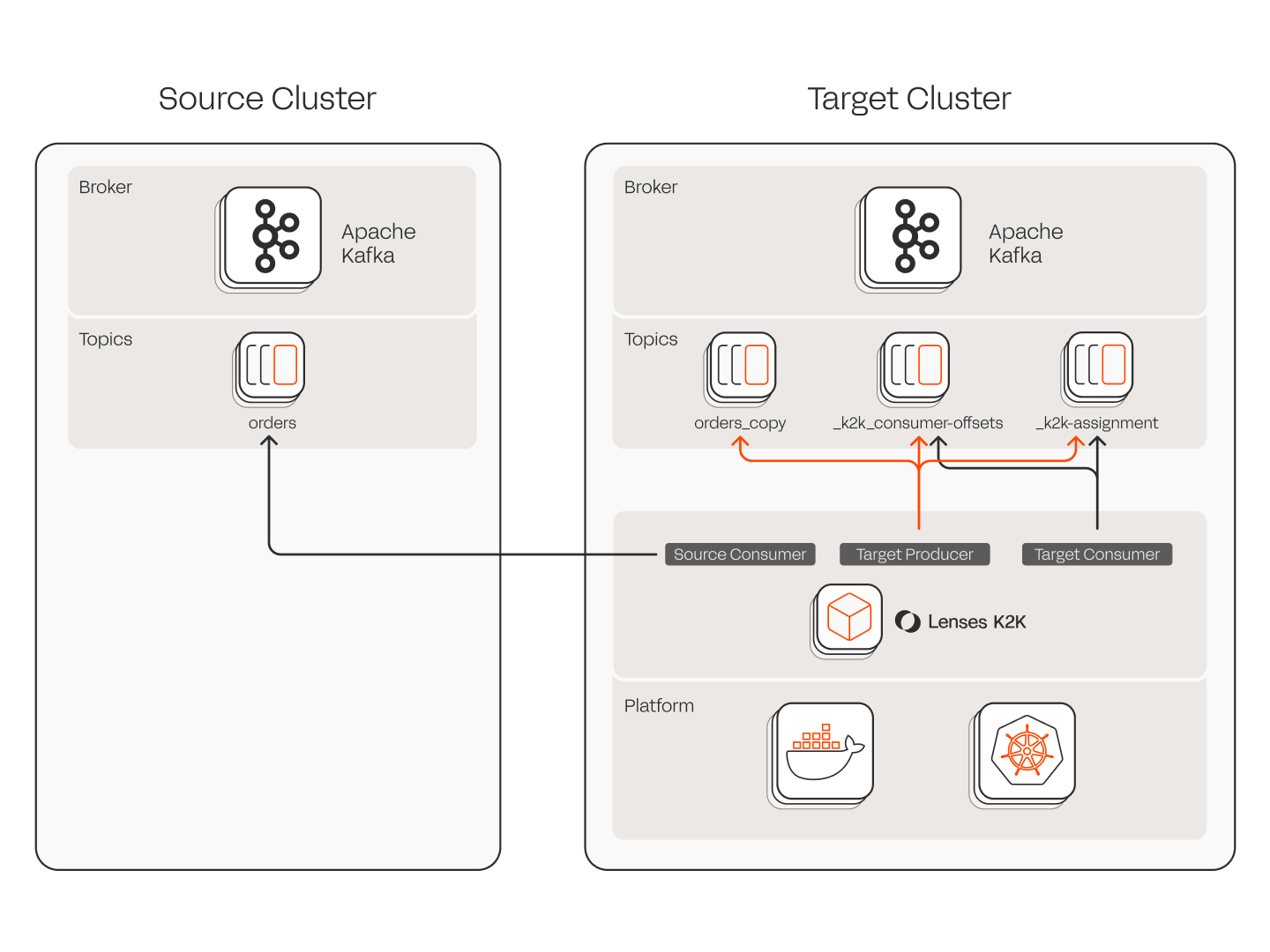

Lenses K2K is an application that allows you to replicate Kafka topics from one Kafka cluster to another.

Feedback

Found an issue? Let us know on GitHub or Slack, through Ask Marios, or by email.

To run K2K, you must accept the EULA and obtain a free license.

Accept the EULA by setting `license.acceptEula` to `true`.
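In a YAML configuration file, a dotted key such as `license.acceptEula` usually means an `acceptEula` entry nested under a top-level `license` mapping. The sketch below assumes that shape (an assumption; confirm it against the K2K configuration reference) and shows a pre-deployment check that a CI job could run on the parsed configuration before starting K2K. The helpers `get_dotted` and `eula_accepted` are hypothetical, not part of K2K, and they work on plain Python dicts so no YAML library is needed.

```python
from __future__ import annotations

from collections.abc import Mapping
from typing import Any


def get_dotted(config: Mapping[str, Any], path: str, default: Any = None) -> Any:
    """Resolve a dotted path such as "license.acceptEula" in nested mappings.

    Hypothetical helper: assumes each dot separates one level of nesting.
    Returns `default` if any segment of the path is missing.
    """
    node: Any = config
    for key in path.split("."):
        if not isinstance(node, Mapping) or key not in node:
            return default
        node = node[key]
    return node


def eula_accepted(config: Mapping[str, Any]) -> bool:
    # Deliberately strict: only a real boolean True passes, so a quoted
    # "true" string in the YAML is flagged before deployment.
    return get_dotted(config, "license.acceptEula") is True


# Assumed YAML equivalent of this dict:
#   license:
#     acceptEula: true
config = {"license": {"acceptEula": True}}

if __name__ == "__main__":
    print(eula_accepted(config))  # True
    print(eula_accepted({"license": {"acceptEula": "true"}}))  # False
```

The strictness is a choice made for this sketch, not a documented K2K rule; relax it if your tooling normalizes values before the check.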

What is K2K?

K2K (Kafka-to-Kafka) is a modern, standalone engine for asynchronously replicating data between Apache Kafka clusters or any Kafka API-compatible implementation. It provides a robust, configurable pipeline that continuously mirrors records, schemas, and topic configurations from a source cluster to a target cluster.

Key Architectural Features

K2K is designed around a set of core principles that make it a powerful and efficient solution for Kafka replication.

Cloud-Native Deployment: K2K is distributed as a lightweight Docker container, designed for deployment in cloud-native environments. It includes first-class support for Kubernetes, with an official Helm chart provided for simplified installation, configuration, and lifecycle management.

Horizontal Scalability: The application is designed to scale horizontally to handle high-throughput workloads. You can run multiple K2K instances in parallel, and they will automatically coordinate to distribute the replication workload across all available runners, allowing throughput to be scaled simply by adjusting the number of replicas.
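This page does not describe how K2K instances coordinate internally. As an illustration only of why adding replicas raises throughput, the sketch below deals a set of topic partitions out to N runners round-robin, in the spirit of Kafka's consumer-group partition assignment. The function `assign_partitions`, the runner names, and the `orders` topic are hypothetical.

```python
from __future__ import annotations


def assign_partitions(
    partitions: list[tuple[str, int]], runners: list[str]
) -> dict[str, list[tuple[str, int]]]:
    """Round-robin assignment of (topic, partition) pairs to runners.

    Illustrative only, not K2K's algorithm. Both inputs are sorted so every
    runner computes the same assignment independently from the same inputs.
    """
    if not runners:
        raise ValueError("at least one runner is required")
    ordered_runners = sorted(runners)
    assignment: dict[str, list[tuple[str, int]]] = {r: [] for r in ordered_runners}
    for i, tp in enumerate(sorted(partitions)):
        # Deal partitions out like cards: 0 -> runner 0, 1 -> runner 1, ...
        assignment[ordered_runners[i % len(ordered_runners)]].append(tp)
    return assignment


if __name__ == "__main__":
    partitions = [("orders", p) for p in range(6)]
    for n in (1, 2, 3):
        runners = [f"k2k-{i}" for i in range(n)]
        load = {r: len(tps) for r, tps in assign_partitions(partitions, runners).items()}
        print(n, load)
    # 1 {'k2k-0': 6}
    # 2 {'k2k-0': 3, 'k2k-1': 3}
    # 3 {'k2k-0': 2, 'k2k-1': 2, 'k2k-2': 2}
```

In this sketch, as in consumer-group-based designs generally, a seventh runner for these six partitions would receive no work, so useful parallelism is bounded by the number of source partitions.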

Self-Contained and Frameworkless: Unlike solutions that rely on the Kafka Connect framework, K2K is a completely self-contained application. This gives it a significantly smaller resource footprint and a simpler operational model, and it eliminates the need to deploy and manage a separate, general-purpose Connect cluster.

Declarative Configuration: All aspects of a replication pipeline—from cluster connections and topic selection to advanced features like exactly-once semantics and error handling—are defined in a single, comprehensive YAML configuration file. This allows the entire replication topology to be managed as code and integrated into version control and CI/CD workflows.
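Because the whole pipeline lives in one file, a CI job can summarize what a change to that file actually does. The sketch below flattens two revisions of a parsed configuration into dotted paths (the same notation as `license.acceptEula`) and lists added, removed, and changed settings. The functions `flatten` and `diff_configs` are hypothetical, and every key other than `license.acceptEula` (such as `features.exactlyOnce` or `replication.topics`) is an invented placeholder, not the real K2K schema.

```python
from __future__ import annotations

from typing import Any


def flatten(config: dict[str, Any], prefix: str = "") -> dict[str, Any]:
    """Flatten nested dicts into dotted keys.

    Example: {"license": {"acceptEula": True}} -> {"license.acceptEula": True}.
    Empty dicts are kept as leaf values.
    """
    flat: dict[str, Any] = {}
    for key, value in config.items():
        path = f"{prefix}{key}"
        if isinstance(value, dict) and value:
            flat.update(flatten(value, path + "."))
        else:
            flat[path] = value
    return flat


def diff_configs(old: dict[str, Any], new: dict[str, Any]) -> list[str]:
    """Return review-friendly change lines between two pipeline revisions."""
    a, b = flatten(old), flatten(new)
    changes: list[str] = []
    for key in sorted(a.keys() | b.keys()):
        if key not in b:
            changes.append(f"- {key}: {a[key]!r}")
        elif key not in a:
            changes.append(f"+ {key}: {b[key]!r}")
        elif a[key] != b[key]:
            changes.append(f"~ {key}: {a[key]!r} -> {b[key]!r}")
    return changes


if __name__ == "__main__":
    # Placeholder keys for illustration; not the actual K2K schema.
    old = {"license": {"acceptEula": True}, "features": {"exactlyOnce": False}}
    new = {
        "license": {"acceptEula": True},
        "features": {"exactlyOnce": True},
        "replication": {"topics": ["orders"]},
    }
    for line in diff_configs(old, new):
        print(line)
    # ~ features.exactlyOnce: False -> True
    # + replication.topics: ['orders']
```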
